Social media algorithms, artificial intelligence, and our own genetics are among the factors influencing us beyond our awareness. This raises an ancient question: do we have control over our own lives?

This article by the University of Adelaide’s Lewis Mitchell and James Bagrow of the University of Vermont is part of The Conversation’s series on the science of free will.

Have you ever watched a video or movie because YouTube or Netflix recommended it to you? Or added a friend on Facebook from the list of “people you may know”?

And how does Twitter decide which tweets to show you at the top of your feed?

These platforms are driven by algorithms, which rank and recommend content for us based on our data.

As Woodrow Hartzog, a professor of law and computer science at Northeastern University, Boston, explains:

“If you want to know when social media companies are trying to manipulate you into disclosing information or engaging more, the answer is always.”

So if we are making decisions based on what’s shown to us by these algorithms, what does that mean for our ability to make decisions freely?

What we see is tailored for us

An algorithm is a digital recipe: a list of rules for achieving an outcome, using a set of ingredients. Usually, for tech companies, that outcome is to make money by convincing us to buy something or keeping us scrolling in order to show us more advertisements.

The ingredients used are the data we provide through our actions online – knowingly or otherwise. Every time you like a post, watch a video, or buy something, you provide data that can be used to make predictions about your next move.
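To make the recipe metaphor concrete, here is a minimal sketch of a ranking rule, assuming a toy setting in which each item carries a single topic label. The function name `rank_items`, the data layout, and the example items are all hypothetical; real platforms use far richer signals and do not publish their ranking code.

```python
from collections import Counter


def rank_items(history, candidates):
    """Order candidate items by how often the user engaged with each item's topic.

    history and candidates are lists of (item_name, topic) pairs.
    This is an illustrative toy rule, not any platform's actual algorithm.
    """
    # The "ingredients": count past engagements per topic.
    topic_counts = Counter(topic for _, topic in history)
    # The "recipe": show items from the most-engaged topics first.
    # A Counter returns 0 for topics the user has never engaged with.
    return sorted(candidates, key=lambda item: topic_counts[item[1]], reverse=True)


# Hypothetical data: two cooking videos and one politics video watched so far.
history = [("video_a", "cooking"), ("video_b", "cooking"), ("video_c", "politics")]
candidates = [("video_x", "sports"), ("video_y", "politics"), ("video_z", "cooking")]

ranked = rank_items(history, candidates)
print([name for name, _ in ranked])
```

Even this crude rule feeds on itself: whatever is ranked first is more likely to be watched, which raises that topic's count the next time the ranking runs.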

These algorithms can influence us, even if we’re not aware of it. As the New York Times’ Rabbit Hole podcast explores, YouTube’s recommendation algorithms can drive viewers to increasingly extreme content, potentially leading to online radicalisation.

Facebook’s News Feed algorithm ranks content to keep us engaged on the platform. It can produce a phenomenon called “emotional contagion”, in which seeing positive posts leads us to write positive posts ourselves, and seeing negative posts makes us more likely to write negative ones. The study that reported this effect was controversial, however, partly because the effect sizes were small.

Also, so-called “dark patterns” are designed to trick us into sharing more, or spending more on websites like Amazon. These are tricks of website design such as hiding the unsubscribe button, or showing how many people are buying the product you’re looking at right now. They subconsciously nudge you towards actions the site would like you to take.

You are being profiled

Cambridge Analytica, the company involved in the largest known Facebook data leak to date, claimed to be able to profile your psychology based on your “likes”. These profiles could then be used to target you with political advertising.

“Cookies” are small pieces of data which track us across websites. They are records of actions you’ve taken online (such as links clicked and pages visited) that are stored in the browser. Combining them with data from multiple other sources, including large-scale hacks, is known as “data enrichment”. It can link our personal data, like email addresses, to other information such as our education level.
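Data enrichment amounts to joining records on a shared identifier. The sketch below assumes two hypothetical datasets that both contain an email address; the function name `enrich`, the field names, and the records are invented for illustration only.

```python
def enrich(browsing_records, leaked_records):
    """Attach fields from leaked_records to browsing records with the same email.

    Emails are compared case-insensitively. Records without a match are
    returned unchanged.
    """
    leaked_by_email = {rec["email"].lower(): rec for rec in leaked_records}
    enriched = []
    for rec in browsing_records:
        match = leaked_by_email.get(rec["email"].lower(), {})
        # Fields from the browsing record take priority over the leaked ones.
        enriched.append({**match, **rec})
    return enriched


# Hypothetical records from two unrelated sources.
browsing = [{"email": "Alex@example.com", "pages_visited": 12}]
leaked = [{"email": "alex@example.com", "education": "bachelor"}]

profiles = enrich(browsing, leaked)
print(profiles)
```

Neither source alone connects browsing behaviour to education level; the join does.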

These data are regularly used by tech companies like Amazon, Facebook, and others to build profiles of us and predict our future behaviour.

You are being predicted

So, how much of your behaviour can be predicted by algorithms based on your data?

Our research, published in Nature Human Behaviour last year, explored this question by looking at how much information about you is contained in the posts your friends make on social media.

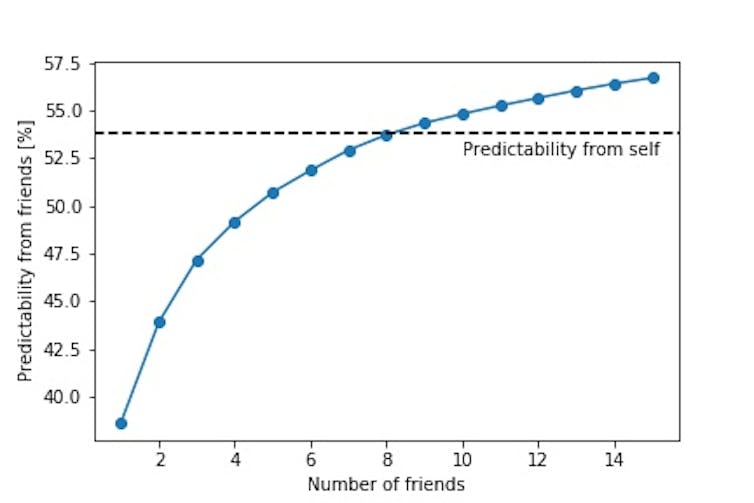

Using data from Twitter, we estimated how predictable people’s tweets were using only data from their friends. We found that data from eight or nine friends was enough to predict someone’s tweets just as well as if we had downloaded that person’s own tweets directly (well over 50% accuracy, see graph). Indeed, 95% of the potential predictive accuracy that a machine learning algorithm might achieve is obtainable from friends’ data alone.

Bagrow, Liu, & Mitchell (2019)
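One way to build intuition for "predicting someone from their friends" is to ask how many bits per word it takes to encode a person's text using a model fitted only on other people's text. The sketch below is a deliberately crude stand-in, assuming a simple word-frequency model with add-one smoothing; it is not the estimator used in the study, and the function name `cross_entropy` and the example sentences are hypothetical.

```python
import math
from collections import Counter


def cross_entropy(target_words, source_words, alpha=1.0):
    """Average bits per word to encode target_words with a unigram model
    fitted on source_words, using add-alpha smoothing.

    Lower values mean the source text is more informative about the target.
    """
    vocab = set(target_words) | set(source_words)
    counts = Counter(source_words)
    total = len(source_words) + alpha * len(vocab)
    bits = -sum(math.log2((counts[w] + alpha) / total) for w in target_words)
    return bits / len(target_words)


# Hypothetical toy texts.
user = "coffee then maths then coffee".split()
friends = "maths and coffee and more coffee".split()
strangers = "football and cricket and rugby".split()

from_friends = cross_entropy(user, friends)
from_strangers = cross_entropy(user, strangers)
print(from_friends < from_strangers)
```

In this toy case the friends' words encode the user's words more cheaply than the strangers' words do, which is the sense in which information about a person can sit in the posts of the people around them.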

Our results mean that even if you #DeleteFacebook (which trended after the Cambridge Analytica scandal in 2018), you can still be profiled through the social ties that remain. And that’s before we consider the things about Facebook that make it so difficult to delete anyway.

We also found it’s possible to build profiles of non-users — so-called “shadow profiles” — based on their contacts who are on the platform. Even if you have never used Facebook, if your friends do, there is the possibility a shadow profile could be built of you.

On social media platforms like Facebook and Twitter, privacy is no longer tied to the individual, but to the network as a whole.

No more free will? Not quite

But all hope is not lost. If you do delete your account, the information contained in your social ties with friends grows stale over time. We found predictability gradually declines to a low level, so your privacy and anonymity will eventually return.

While it may seem like algorithms are eroding our ability to think for ourselves, it’s not necessarily the case. The evidence on the effectiveness of psychological profiling to influence voters is thin.

Most importantly, when it comes to the role of people versus algorithms in things like spreading (mis)information, people are just as important. On Facebook, the extent of your exposure to diverse points of view is more closely related to your social groupings than to the way News Feed presents you with content. And on Twitter, while “fake news” may spread faster than facts, it is primarily people who spread it, rather than bots.

Of course, influence runs in both directions: content creators exploit platforms’ algorithms to promote their content on YouTube, Reddit and elsewhere.

At the end of the day, underneath all the algorithms are people. And we influence the algorithms just as much as they may influence us.

Lewis Mitchell, Senior Lecturer in Applied Mathematics, University of Adelaide, and James Bagrow, Associate Professor, Mathematics & Statistics, University of Vermont

This article is republished from The Conversation under a Creative Commons license.
